Generative AI has officially moved from the "innovation lab" to the P&L statement. If a digital storefront still relies on static catalogs and deterministic keyword matching in 2026, it is not merely behind — it is invisible.

The era of "browsing" is dead. The era of "curation" is here.

Optimization strategies that rely on A/B testing button colors or tweaking H1 tags reached their ceiling years ago. The new frontier of revenue growth is built on dynamic, real-time adaptation. AI in E-Commerce is no longer a competitive differentiator; it is the infrastructure required for survival.

Here is how enterprise-grade technical strategies are leveraging these tools to drive a projected 25% lift in global conversion rates this year.

The friction of typing specific keywords and filtering by "Price: Low to High" is now unacceptable. In 2026, discovery is driven by Semantic Search and Vector Embeddings.

Modern architecture has shifted from keyword-based indexing to intent-based understanding. Customers no longer search; they prompt. A query like "I need a waterproof jacket for a hike in Seattle that looks good at dinner" requires a system capable of parsing context, weather data, and aesthetic preferences simultaneously.

By implementing Intelligent Search & Discovery solutions, platforms can reduce bounce rates by nearly 40%. When the tech stack understands the user's intent rather than just matching text strings, the path to checkout shortens dramatically.
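
To make the idea of intent-based matching concrete, here is a minimal sketch of ranking products by vector similarity rather than by keywords. The three-dimensional vectors, the product names, and the query embedding are all invented for illustration; a production system would obtain embeddings from a trained model and store them in a vector index.

```python
import math

# Hypothetical 3-dimensional embeddings. The axes loosely stand for
# (weather protection, outdoor use, dressy look). Real embeddings come
# from a trained model and have hundreds of dimensions.
CATALOG = {
    "waterproof hiking shell": [0.9, 0.9, 0.2],
    "tailored rain trench coat": [0.8, 0.5, 0.9],
    "cotton summer blazer": [0.1, 0.1, 0.9],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_products(query_vector, catalog):
    """Sort product names by similarity to the query, best match first."""
    return sorted(
        catalog,
        key=lambda name: cosine_similarity(query_vector, catalog[name]),
        reverse=True,
    )


# Assumed embedding of a prompt asking for something waterproof,
# suitable for a hike, and presentable at dinner.
query = [0.9, 0.7, 0.8]
ranking = rank_products(query, CATALOG)
print(ranking)
```

Note that none of the product names shares the word "jacket" with the shopper's prompt; the ranking depends only on how close the vectors are, which is the point of moving away from string matching.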

One-size-fits-all content is a conversion killer. Previously, scaling personalization required massive creative teams. Today, Generative AI handles dynamic asset creation in milliseconds.

Hyper-personalization now implies the real-time generation of product descriptions, lifestyle imagery, and video assets tailored to specific user segments.

McKinsey data indicates that companies mastering this level of "segment-of-one" marketing generate 40% more revenue from personalization than their peers. This is not just marketing; it is automated, scalable relevance.

The most significant architectural shift in 2026 is the rise of Agentic Commerce.

Legacy decision-tree chatbots have been replaced by autonomous custom AI agents. These agents possess the agency to negotiate, cross-sell, and resolve complex logistics queries without human intervention.

If a user hesitates on a high-ticket item, the agent analyzes sentiment and session data to offer a precision incentive — such as free shipping or a tailored bundle — to convert the sale instantly. According to Gartner, nearly 30% of new customer interactions are now handled by agents that exceed human support metrics in speed and accuracy.

The operational reality is stark: None of this works without clean data.

Deploying a state-of-the-art Large Language Model (LLM) on top of siloed or dirty data results in hallucination and revenue loss. The system will recommend winter gear in July or offer discounts on out-of-stock inventory.
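
One common guard against these failures is to check every model suggestion against live inventory data before it reaches the shopper. The sketch below assumes a hypothetical `Product` record with a stock count and a season tag; the field names are illustrative and not taken from any particular platform.

```python
from dataclasses import dataclass


@dataclass
class Product:
    sku: str
    in_stock: int
    season: str  # "winter", "summer", or "all" (hypothetical tagging scheme)


def filter_recommendations(candidates, current_season):
    """Keep only candidates that are in stock and fit the current season."""
    return [
        p for p in candidates
        if p.in_stock > 0 and p.season in (current_season, "all")
    ]


suggested = [
    Product("parka", 5, "winter"),
    Product("sandals", 0, "summer"),
    Product("tee", 12, "summer"),
    Product("socks", 3, "all"),
]
safe = filter_recommendations(suggested, "summer")
print([p.sku for p in safe])
```

The filter is only as good as the stock counts it reads, which is why the data foundation matters more than the model sitting on top of it.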

Successful AI in E-Commerce strategies rely on a modernized data mesh. This requires a unified view of inventory, customer history, and real-time market signals.

Retailers are adopting modern architectural standards to ensure models are fed accurate, real-time context. To keep models sharp and conversions high, rigorous MLOps is non-negotiable. This is why our Cloud & DevOps Services prioritize the backend infrastructure — ensuring the AI remains functional, safe, and profitable.

The winners in this landscape are not the ones with the deepest discounts. They are the ones who remove the friction of discovery entirely.

To get there, you need more than just a generic chatbot or a standard recommendation plugin. You need a platform built for real-time semantic search, autonomous agents, and high-velocity data processing.

At Opinov8, we don’t just integrate AI models; we engineer revenue engines. Whether you need to modernize a legacy backend for high-speed inference or build a greenfield agentic commerce system, we are the partner that gets you to market faster.

Let’s talk about your e-commerce roadmap.

The Software as a Service (SaaS) model has long ceased to be just a delivery method; in 2026, it has become the fundamental operating system of the modern enterprise. With the global SaaS market projected to exceed $300 billion this year, fueled by the widespread adoption of AI-driven architectures and autonomous agents, the barrier to entry has never been lower, but the barrier to sustained success has never been higher.

For the last decade, giants like Salesforce and Dropbox defined the playbook. Today, however, the rules have changed. Success in 2026 isn't just about moving to the cloud; it’s about intelligent specialization, sustainable unit economics, and infrastructure that can handle the next generation of compute-heavy workloads.

Here are the critical aspects that distinguish thriving SaaS organizations from those that stall.

We often use the acronym "SaaS" without pausing to reflect on the "Service" component. In the rush to capture market share, many newcomers fall into the trap of becoming "Software as Many Services" (SaMS). They attempt to be a Swiss Army knife, adding disparate features, from CRM to project management, hoping something sticks. In 2026, this generalist approach is a fast track to failure.

Successful SaaS companies today are defined by vertical depth. The market has shifted toward domain-specific solutions where AI models are trained on highly specialized data. Just as early winners found a single need and perfected it, modern leaders are building hybrid cloud infrastructures that solve specific, high-value problems with precision. You cannot be the operating system for everyone; you must be the mission-critical engine for someone.

When a SaaS startup launches, the focus is often on survival — securing the next month of runway. However, a growth strategy that works for 1,000 users often breaks at 100,000. In 2026, scaling isn't just about adding more servers; it’s about architectural resilience and financial predictability.

As you expand, your infrastructure costs, now heavily influenced by AI compute usage, can spiral if not managed correctly. Leaders must adopt a cloud-managed platform approach early on. This ensures that as your user base grows, your technical debt doesn't grow with it. A company that lacks a roadmap for infrastructure scalability is building a skyscraper on a foundation of sand.

The "freemium" model was the darling of the 2010s, propelling companies like Slack to stardom. But in 2026, offering a free product and hoping for viral adoption is not a marketing strategy. The market is saturated, and user attention is scarce.

Modern cloud computing trends suggest that buyers scrutinize purchases more closely than ever. If users don’t understand the immediate value of your product, a free tier won't save you. Marketing today requires a sophisticated mix of Product-Led Growth (PLG) and targeted value demonstration. You must educate the market on why your solution exists and how it solves their specific pain point better than the noise. Accessibility allows people to try your product; only clear value positioning gets them to stay.

While user acquisition grabs headlines, retention builds empires. A SaaS company cannot sustain itself if its user base remains perpetually in the "free" tier. The economics simply don't work, especially with the rising costs of delivering intelligent, agentic features.

Conversion in 2026 is about demonstrating an undeniable ROI. It may be necessary to limit free-tier capabilities or move toward usage-based pricing models to ensure that revenue scales with value delivery. If your sales velocity cannot support your infrastructure and support costs, the business model is broken. The goal is to turn users into partners who see your software as essential to their own success.
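
The arithmetic behind that claim is simple enough to sketch. The function below compares one customer's monthly margin on a free tier and on usage-based pricing; every figure (units used, per-unit price, per-unit compute cost, support cost) is a made-up assumption chosen only to show the shape of the calculation.

```python
def monthly_margin_cents(units_used, price_per_unit_cents,
                         cost_per_unit_cents, support_cost_cents):
    """Revenue minus compute and support cost for one customer, in cents."""
    revenue = units_used * price_per_unit_cents
    cost = units_used * cost_per_unit_cents + support_cost_cents
    return revenue - cost


# Hypothetical figures: 1,000 units of usage, 2 cents of compute per unit,
# 500 cents of support per month.
free_user = monthly_margin_cents(1_000, 0, 2, 500)  # free tier: no revenue
paid_user = monthly_margin_cents(1_000, 5, 2, 500)  # billed 5 cents per unit
print(free_user, paid_user)
```

Under these assumptions the free user costs money every month while the metered user covers the same usage with room to spare, and the gap widens as usage grows.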

The SaaS landscape of 2026 requires a blend of technical excellence and strategic focus. It demands that you stay niche, build for scale, market with intent, and monetize with precision.

As you navigate these complexities, having the right technical partner can make the difference between stagnation and market leadership. At Opinov8, we specialize in helping companies architect scalable platforms, modernize legacy systems, and integrate the intelligent technologies required to compete in today's market.

Whether you are building a new SaaS product from scratch or need to re-platform for the AI era, our team is ready to help you engineer your digital future.

Get a free consultation to discuss your SaaS strategy

Technology has long promised to streamline collaboration. Yet for years, human bottlenecks (endless synchronization meetings, fragmented data context, and manual administrative overhead) kept true efficiency out of reach, creating a critical gap that AI agents are now filling.

By 2026, the narrative has shifted. We are no longer talking about simple "productivity tools" that shave seconds off a task. We have entered the era of agentic workflows and digital coworkers. The integration of Artificial Intelligence into team dynamics is no longer about assisting humans; it’s about autonomous agents proactively managing the "work about work," allowing human teams to focus entirely on high-value strategy and innovation.

Here is how advanced AI ecosystems are fundamentally changing how high-performance teams operate today.

In the early 2020s, AI scheduling tools could merely read calendars and suggest times. Today, autonomous agents handle the entire lifecycle of collaboration.

It is not just about finding a slot; it is about context. Modern AI agents function as active participants in project management. They monitor project velocity, identify when a critical decision is blocked, and autonomously schedule a "huddle" with the exact right stakeholders, preparing an agenda based on the code repository’s recent commits or the design team’s latest updates.

According to insights from Microsoft’s WorkLab, we are witnessing a transition where AI agents act as "digital coworkers," capable of handling complex, multi-step coordination tasks without human intervention. This eliminates the cognitive load of "managing" the schedule, ensuring that meetings only happen when they are algorithmically determined to be necessary for unblocking progress.

The biggest killer of team velocity has always been the "context switch" — stopping to find a document, recall a decision made three months ago, or explain a legacy codebase to a new joiner.

Generative AI has evolved from simple text prediction to sophisticated Retrieval-Augmented Generation (RAG) systems that serve as a collective institutional brain. Teams no longer search for files; they query the project context. An engineer can ask, "Why did we choose this architecture for the payment gateway?" and receive a synthesized answer citing Slack conversations, Jira tickets, and architectural diagrams from last year.

However, relying on these systems requires a solid foundation. You cannot build a high-performing AI layer without a robust underlying architecture. As detailed in our guide on hybrid cloud infrastructure, ensuring your data is accessible yet secure across environments is the prerequisite for these AI agents to function effectively. Without the right infrastructure, even the smartest agents remain blind.

High-functioning teams in 2026 are data-driven, not gut-driven. AI is now deeply embedded in resource planning, using historical data to predict burnout risks or project slippage weeks before a human manager would notice.

For example, by analyzing commit patterns and communication sentiment, AI can flag when a team is over-indexing on technical debt rather than feature work. This visibility allows leadership to pivot resources dynamically.

This level of insight is powered by advancements in data science and analytics. By treating team activity as a data stream, organizations can optimize their workflows with the same precision they use to optimize their code. This shift aligns with Gartner’s strategic predictions, which foresee a flattening of organizational structures as AI assumes many of the coordination and reporting duties previously held by middle management, empowering makers to lead directly.

Collaboration is rarely just text-based. The integration of computer vision and multimodal processing allows teams to collaborate across media seamlessly.

Imagine a construction team where drones capture site progress, and an AI instantly compares the visual data against the CAD drawings to flag discrepancies for the engineering team in real-time. Or a retail team where shelf-monitoring AI autonomously generates restocking tasks in the team's project board. This moves collaboration beyond the digital realm and anchors it in physical reality.

This evolution is rewriting software work, moving developers away from writing boilerplate code to becoming "orchestrators" of these complex systems. As Forrester notes regarding TuringBots, these AI-driven entities are collapsing the software development lifecycle, allowing teams to build and deploy at speeds that were previously unimaginable.

The teams that win in 2026 are not those who work harder, but those who successfully integrate AI agents as core members of their workforce. They treat AI not as a tool to be used, but as a system to be managed.

Navigating this shift requires more than just buying a license; it requires a partner who understands the engineering depth required to build these ecosystems.

Ready to architect a workforce that scales? At Opinov8, we don't just follow trends; we build the platforms that power them. From modernizing your data infrastructure to engineering custom AI agents that fit your specific workflow, we help you turn "future of work" concepts into a deployed reality.

Let’s discuss your AI roadmap.

Cloud migration has evolved from a simple infrastructure upgrade into a critical lever for business resilience and innovation. In 2026, the conversation is no longer about "moving to the cloud" to save on hardware costs; it is about establishing a sovereign, scalable foundation capable of supporting Generative AI, real-time analytics, and rapid market adaptation.

For modern enterprises, the stakes of this transition are incredibly high. A successful migration requires a holistic strategy that accounts for financial governance, architectural modernization, and the integration of emerging technologies. Below is an expert framework for navigating this complex landscape.

The success of your cloud journey is often determined before a single line of code is moved. It begins with selecting a partner who understands your specific business context rather than one who simply applies a template. While global enterprises often default to the largest consultancies, many find that the agility and specialized focus of mid-tier providers yield superior results. Understanding the nuances between Big Tech consulting firms vs. smaller mid-tier providers is essential for leaders who need personalized attention and dedicated expertise rather than just headcount.

Simply rehosting legacy applications on cloud infrastructure, often called "lift and shift," is rarely sufficient in the current market. To unlock true value, organizations must prioritize modernization. This involves refactoring applications to leverage containerization and microservices, which are prerequisites for advanced capabilities like computer vision and intelligent automation.

By adopting these cloud-native architectures, businesses position themselves to integrate Gartner’s top strategic technology trends, such as Agentic AI and spatial computing, directly into their operational workflows.

In 2026, data is the primary asset residing in the cloud. Migration strategies must therefore be data-centric, ensuring that your architecture supports high-speed ingestion and processing. A robust cloud foundation is the bedrock for modern data science and analytics trends, enabling organizations to move from descriptive reporting to predictive modeling and automated decision-making. Without a proper data strategy, the cloud becomes merely a storage facility rather than an innovation engine.

One of the most common pitfalls in cloud adoption is spiraling costs due to a lack of governance. As consumption-based models dominate, implementing FinOps (Financial Operations) is non-negotiable. This cultural practice ensures engineering and finance teams collaborate to maximize the business value of cloud spend. Resources from the FinOps Foundation highlight that organizations with mature FinOps practices save up to 30% on their cloud bills while innovating faster.

As the digital landscape expands, so does the threat surface. Security cannot be an add-on; it must be intrinsic to the migration design. Adopting a Zero Trust architecture—where no user or application is trusted by default—is now the industry standard. Furthermore, maintaining "Digital Trust" is crucial for customer retention. According to McKinsey’s technology outlook, companies that prioritize ethical data management and robust cybersecurity frameworks significantly outperform their peers in consumer confidence.
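
The core of "not trusted by default" is a deny-by-default check: access is refused unless the caller is authenticated and holds an explicit grant. The sketch below illustrates that rule with a hypothetical in-memory allow-list; the service names and the `token_valid` flag are placeholders for whatever identity provider and policy store you actually run.

```python
# Hypothetical allow-list of explicit grants: (identity, resource, action).
ALLOWED = {
    ("billing-service", "invoices-db", "read"),
    ("billing-service", "invoices-db", "write"),
    ("reporting-service", "invoices-db", "read"),
}


def is_allowed(identity, resource, action, token_valid):
    """Deny unless the caller is authenticated AND explicitly granted."""
    if not token_valid:
        return False
    return (identity, resource, action) in ALLOWED


print(is_allowed("reporting-service", "invoices-db", "read", True))
print(is_allowed("reporting-service", "invoices-db", "write", True))
```

Anything not listed is rejected, including requests from services inside the same network, which is the difference from a perimeter-based model.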

The gap between companies that merely exist in the cloud and those that exploit its full potential is widening. At Opinov8, we specialize in bridging that gap. We don't just migrate workloads; we re-engineer your digital ecosystem to be cost-efficient, secure, and AI-ready.

Whether you need to untangle complex legacy systems or build a cutting-edge data platform, our team is ready to help you navigate the future.

Get a free consultation for your migration strategy

For decades, artificial intelligence (AI) was the "white whale" of technology — a promise always on the horizon but rarely within reach. While Dartmouth professor John McCarthy coined the term in 1955, it took nearly seventy years for the technology to mature from theoretical possibility to the foundation of today's most successful AI strategies.

For a long time, tech behemoths like Amazon and Google were the exclusive champions of this frontier. They didn't just adopt AI; they built their entire ecosystems around it. However, the landscape has shifted dramatically. In the current business climate, AI is no longer a differentiator reserved for the "Big Tech" elite; it is the baseline for survival. The era of experimentation is over. We have moved from simple automation to Intelligent Orchestration, where companies of all sizes can and must deploy agentic workflows to remain competitive.

The definition of what AI "can do" has evolved. We are no longer talking merely about Siri or Alexa understanding a voice command. We are witnessing the rise of Agentic AI — systems that don't just generate text or analyze data, but actively reason, plan, and execute tasks across complex workflows.

According to recent Microsoft insights on AI trends, the technology is shifting from being a passive tool to an active digital coworker. This leap allows businesses to move beyond static automation. For example, instead of just flagging a supply chain disruption, an AI agent can now predict the delay, identify alternative vendors, negotiate a spot-buy, and update the ERP system autonomously.

This shift requires a fundamental change in how organizations view their data. As we explore in our guide to Intelligent Orchestration, the benchmark for success is now the ability of your systems to think and pivot on their own, removing the need for constant human hand-holding.

Implementing the right AI engine today is about more than just efficiency; it’s about creating a cognitive business layer. Here is how the application of AI has matured beyond simple machine learning into a driver of core business value:

Traditional search matched keywords. Modern AI uses semantic understanding and Retrieval-Augmented Generation (RAG) to understand the intent behind a query. It doesn't just deliver a list of links; it provides answers and context, drastically reducing the friction between a customer’s need and the solution.

The days of "customers who bought this also bought that" are behind us. Today’s models utilize advanced data science and analytics to predict needs before the customer explicitly expresses them. By analyzing real-time behavioral data, AI can curate hyper-personalized experiences that feel intuitive rather than algorithmic.

As digital commerce grows, so does the noise. AI has become the primary defense against "astroturfing" and fake reviews. Advanced Natural Language Processing (NLP) models can now detect subtle patterns in syntax and posting behavior to flag inauthentic content, ensuring that your brand reputation relies on verified, genuine customer feedback.

Pricing is no longer just "dynamic" — it is predictive. AI models analyze competitor pricing, local demand surges, and even weather patterns to adjust pricing strategies in real time, maximizing revenue without sacrificing customer loyalty.

Despite the clear benefits, many organizations struggle to move from Proof of Concept (PoC) to production. The challenges often stem from fragmented data estates and a lack of governance. As noted in the Capgemini Tech Trends report, we are entering the "Year of Truth" for AI, where the focus shifts from hype to measurable, scalable impact.

To achieve this, businesses must address the foundational data challenges in AI integration. Without a clean, governed data architecture, even the most advanced AI models will fail to deliver ROI. This is where the divide between the leaders (like Google and Amazon) and the laggards widens — leaders treat data as a product, not a byproduct.

The trajectory is clear: AI is eating software, and software is eating the world. Deloitte’s technology analysis highlights that AI is restructuring tech organizations to be leaner and more strategic. The companies that hesitate now aren't just missing out on a trend; they are accumulating "intelligence debt" that will be exponentially harder to pay off in the future.

Making the technological investment today does more than just modernize your stack — it future-proofs your business model.

At Opinov8, we don't just follow trends; we help build the platforms that define them. Whether you need to rescue a stalled AI initiative, modernize your data estate, or build custom agentic workflows, our team is ready to bridge the gap between strategy and execution.

Six years ago, "Digital Transformation" was the buzzword everyone was chasing — mostly a frantic reaction to a world that forced us to move our operations online in a matter of weeks. But today, that initial migration is ancient history. We’ve moved past the novelty of just "having" digital tools; the real focus now is on how business technology allows those tools to work together without constant human hand-holding.

Looking at the current landscape, the benchmark for success has shifted. It’s no longer just about basic efficiency or cost-cutting. Instead, the leaders in the space are prioritizing resilience and a concept I like to call "intelligent orchestration" — building systems that can actually think and pivot on their own.

Here is a look at the eight fundamental ways the technological backbone of modern enterprise is being rewritten right now.

The era of asking an AI to write an email is behind us. We’ve entered the age of Agentic AI — systems that don’t just suggest content but execute tasks. Imagine an AI agent that notices a supply chain delay, identifies a secondary vendor, negotiates a spot-buy, and updates the ERP system without a human ever touching a keyboard. To make this work, companies are overhauling their data foundations through specialized AI and data consulting to ensure these agents have high-quality, real-time information to act upon.

Cloud computing is no longer a borderless frontier. Because of tightening data privacy laws and global tensions, we are seeing a massive surge in Sovereign Cloud architectures. Businesses are moving away from centralized global hubs toward localized, compliant infrastructures that keep data within specific jurisdictions. It’s a complex balancing act that requires a highly modular approach to cloud engineering to stay both agile and legal.

Quantum computing is moving from the lab to the real world, and with it comes a massive security risk: "Harvest now, decrypt later." Hackers are stealing encrypted data today, waiting for the day quantum processors can crack it. This has made Post-Quantum Cryptography (PQC) a priority. The National Institute of Standards and Technology (NIST) has finalized its first set of quantum-safe standards, and savvy CTOs are beginning the long process of crypto-agility migration.

Software engineering has changed fundamentally. We aren't seeing AI replace developers; we are seeing it act as a high-powered exoskeleton. By using AI to handle the "grunt work" of boilerplate code and unit testing, product engineering teams are now focusing almost entirely on high-level system architecture and user logic. This shift has shortened the gap between a business idea and a live product by nearly 40%.

"Green IT" is no longer a PR move — it’s a financial one. With carbon taxes and high energy costs, Carbon-Aware Computing has become a standard practice. This involves designing software that intentionally schedules energy-heavy data processing during hours when the local grid is powered by renewables. The Green Software Foundation is currently leading the charge in establishing these energy-efficient coding standards that are now a requirement for any enterprise-grade build.
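
As a rough illustration of carbon-aware scheduling, the sketch below picks the start hour for a batch job by finding the contiguous window with the lowest total grid carbon intensity. The forecast numbers are invented; in practice they would come from a grid-data provider, and the function name is our own.

```python
def pick_greenest_window(forecast, job_hours):
    """Return the start hour of the lowest-carbon contiguous window.

    forecast: carbon intensity (gCO2/kWh) per hour, index = hours from now.
    job_hours: how many consecutive hours the job needs.
    """
    if job_hours <= 0 or job_hours > len(forecast):
        raise ValueError("job_hours must be between 1 and len(forecast)")
    best_start = 0
    best_total = sum(forecast[:job_hours])
    for start in range(1, len(forecast) - job_hours + 1):
        total = sum(forecast[start:start + job_hours])
        if total < best_total:
            best_start, best_total = start, total
    return best_start


# Hypothetical 8-hour forecast with a midday dip from solar generation.
forecast = [420, 390, 310, 180, 150, 210, 400, 450]
start_hour = pick_greenest_window(forecast, job_hours=2)
print(start_hour)
```

The job runs for the same duration either way; only its start time moves, which is why this technique suits deferrable work such as nightly reports or model retraining.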

Now that the hardware has finally caught up to the vision, Spatial Computing is delivering real ROI in industrial settings. We are seeing engineers use high-fidelity overlays to repair complex machinery or simulate warehouse floor layouts in real-time. This isn't the "metaverse" for consumers; it’s a functional Industrial Metaverse that uses Digital Twins to prevent mistakes before they happen in the physical world.

User experience (UX) is moving from reactive to anticipatory. Thanks to deep-learning models, apps and platforms no longer wait for a user to click a button — they predict the next action based on context, biometrics, and historical patterns. It’s the difference between a tool that waits for instructions and a partner that prepares the workspace for you.

The "monolithic" software era is dead. Modern enterprises are built like Legos. This Composable Architecture allows a company to swap out its payment provider, its CRM, or its AI engine without breaking the entire system. It’s the ultimate form of future-proofing, allowing businesses to pivot their strategy in days rather than months. Gartner’s research into composable business continues to show that this modularity is the single biggest predictor of market resilience.
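
In code, composability usually comes down to depending on an interface rather than on a vendor. The sketch below shows a checkout that accepts any payment provider matching a small contract; `ProviderA`, `ProviderB`, and the return strings are hypothetical stand-ins, not real SDKs.

```python
from typing import Protocol


class PaymentProvider(Protocol):
    """The contract every payment integration must satisfy."""

    def charge(self, amount_cents: int) -> str: ...


class ProviderA:
    def charge(self, amount_cents: int) -> str:
        return f"provider-a:charged:{amount_cents}"


class ProviderB:
    def charge(self, amount_cents: int) -> str:
        return f"provider-b:charged:{amount_cents}"


class Checkout:
    """Depends only on the PaymentProvider contract, not on a vendor."""

    def __init__(self, payments: PaymentProvider) -> None:
        self.payments = payments

    def pay(self, amount_cents: int) -> str:
        return self.payments.charge(amount_cents)


# Swapping vendors is a one-line change at the composition point.
print(Checkout(ProviderA()).pay(1999))
print(Checkout(ProviderB()).pay(1999))
```

The `Checkout` class never changes when the provider does, which is what lets a strategy pivot take days rather than months.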

Technology is moving faster than most internal teams can keep up with. The question isn't just about what tech to buy, but how to integrate these autonomous, sovereign, and sustainable systems into a strategy that actually drives revenue.

At Opinov8, we don’t just build software; we architect the systems that allow businesses to out-innovate their competition. Whether you’re looking to deploy agentic workflows or move toward a more composable architecture, our team is here to turn these technical evolutions into your competitive advantage.

Let’s talk about your next move! Get in touch with us to start a conversation. See our work in action and how we’ve solved complex engineering hurdles for our clients.

As the retail landscape evolves into a highly sophisticated, data-driven ecosystem, the stakes for the holiday season have never been higher. Recent industry analysis suggests that brands failing to modernize their infrastructure risk losing significant market share during peak trading periods.

In 2026, operational maturity is the new baseline. Retailers are moving beyond simple cloud adoption to leverage composable architectures and hybrid cloud infrastructures that offer unparalleled resilience. The cost of technical stagnation is steep; delaying the modernization of core systems can lead to inefficiencies that bleed revenue precisely when traffic is highest.

To combat volatility and capitalize on surges like Black Friday, brands must leverage the cloud not just for storage, but as an engine for optimization and scalability. Here are three strategic reasons why a cloud-native approach is essential for dominating this holiday season.

Customer experience (CX) is the primary battlefield for holiday retention. In an era where retail technology trends point toward "Agentic Commerce," where AI agents autonomously manage shopping journeys, consumers expect friction-free, personalized service across every touchpoint.

Leading retailers are blurring the lines between online and offline worlds. Store associates empowered with cloud-connected devices can now access real-time customer profiles and purchase history, acting as high-value consultants rather than just checkout staff. This capability relies heavily on AI in e-commerce, which processes vast datasets instantly to tailor interactions. By removing friction and offering immediate, context-aware service, brands can significantly boost conversion rates even during the wildest holiday rushes.

The "out of stock" notification is a conversion killer. Historically, siloed data caused lags between sales and inventory updates, but 2026 demands real-time synchronization. Modern supply chains are shifting toward total value delivery, where visibility and resilience are paramount.

When a customer buys an item online or scans it at a physical POS, the cloud instantly updates the global inventory ledger. This prevents overselling and enables dynamic reallocation — moving stock from low-traffic warehouses to high-demand locations instantly. Furthermore, cloud-native tools minimize human error by triggering reorders based on predictive demand modeling rather than reactive panic buying, ensuring shelf space is optimized for high-velocity items.
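
A stripped-down version of that ledger might look like the sketch below: one shared record of stock that refuses any sale it cannot fulfil and reports which items need reordering. The class and the fixed reorder points are illustrative assumptions; in the scenario described above, the reorder thresholds would be produced by a demand forecast rather than set by hand.

```python
class InventoryLedger:
    """Single source of truth for stock, shared by online and in-store sales."""

    def __init__(self, stock, reorder_points):
        self.stock = dict(stock)
        # Hypothetical thresholds; a real system would derive them from
        # predictive demand modeling.
        self.reorder_points = dict(reorder_points)

    def sell(self, sku, quantity):
        """Record a sale and return True, or return False if it would oversell."""
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        if self.stock.get(sku, 0) < quantity:
            return False
        self.stock[sku] -= quantity
        return True

    def skus_to_reorder(self):
        """List SKUs whose stock is at or below their reorder point."""
        return sorted(
            sku for sku, level in self.stock.items()
            if level <= self.reorder_points.get(sku, 0)
        )


ledger = InventoryLedger({"scarf": 3, "mug": 10}, {"scarf": 2, "mug": 4})
first_sale = ledger.sell("scarf", 2)   # succeeds, one scarf left
second_sale = ledger.sell("scarf", 2)  # refused, would oversell
print(first_sale, second_sale, ledger.skus_to_reorder())
```

This single-process version ignores concurrency. With many channels writing at once, the check and the decrement would need to happen inside one atomic database transaction.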

The financial argument remains the most compelling case for cloud adoption. Legacy on-premise infrastructure forces retailers to pay for maximum capacity year-round, leaving up to 85% of computing power idle outside of Q4.

Cloud technology flips this model. By utilizing a cloud-managed platform, brands can leverage auto-scaling to spin up server capacity automatically during traffic spikes and scale down when demand normalizes. This "pay-as-you-go" elasticity drastically reduces IT overhead. According to recent market analysis, the shift toward these efficiency models is driving the retail cloud computing market to record highs, as brands reinvest savings into marketing and product innovation.
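
A back-of-the-envelope comparison shows why elasticity matters. The sketch below assumes a hypothetical retailer that needs 15 servers for nine months and 100 servers during the Q4 peak, and it assumes the same price per server-month in both models, which will not hold exactly in practice.

```python
def fixed_capacity_cost(monthly_demand, rate_per_server_month):
    """Provision for the peak month all year, as on-premise sizing requires."""
    return max(monthly_demand) * len(monthly_demand) * rate_per_server_month


def elastic_cost(monthly_demand, rate_per_server_month):
    """Pay only for the servers each month actually needs."""
    return sum(monthly_demand) * rate_per_server_month


def idle_share_off_peak(monthly_demand):
    """Fraction of peak-sized capacity left unused in the quietest month."""
    peak = max(monthly_demand)
    return (peak - min(monthly_demand)) / peak


# Hypothetical demand in servers per month: nine quiet months, then Q4.
demand = [15] * 9 + [100] * 3
fixed = fixed_capacity_cost(demand, rate_per_server_month=1)
elastic = elastic_cost(demand, rate_per_server_month=1)
print(fixed, elastic, idle_share_off_peak(demand))
```

With these invented numbers, peak-sized hardware sits 85% idle in a quiet month, and the elastic bill comes to well under half of the fixed one.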

The gap between retail leaders and laggards is widening. Retailers utilizing modern cloud infrastructures are not just saving money; they are agile enough to pivot instantly to market demands. Whether ensuring 100% uptime during a flash sale or delivering a hyper-personalized offer, the cloud is the backbone of modern retail success.

Migrating to the cloud is more than an IT upgrade — it is a strategic business transformation. At Opinov8, we specialize in helping retail brands navigate complex digital journeys, from building composable commerce architectures to implementing advanced AI agents.

Contact us today to discuss how we can engineer your digital future and ensure you are ready for the holiday season and beyond.

RegTech used to be a back-office support function. In 2026, it is the backbone of enterprise resilience.

With autonomous AI and decentralized finance now standard, compliance isn't just about following rules — it’s a strategic differentiator. Organizations are pivoting from reactive box-ticking to proactive strategy, using these RegTech trends to turn regulatory pressure into a market advantage. Here is what is driving the industry this year.

We have moved past simple automation. 2026 is the year of Agentic AI — systems that don’t just flag risks but actively manage them. These agents interpret regulatory changes, adjust internal controls, and execute filings autonomously.

But power requires proof. With strict "Show Your Work" mandates now enforced globally, the "black box" model is dead. The priority for C-suites is Explainable AI (XAI) — building architectures that create an auditable logic trail for every automated decision.
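One way to picture an auditable logic trail is a hash-chained decision log, sketched below. This is an assumption about how such a trail could be built, not a description of any regulator's required format; `append_decision` and `verify_trail` are hypothetical helpers, and the example rules are invented.

```python
import hashlib
import json


def _digest(body):
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_decision(trail, decision, inputs, reasons):
    """Append a decision record that is hash-linked to the previous one."""
    prev_hash = trail[-1]["hash"] if trail else "0" * 64
    body = {"decision": decision, "inputs": inputs, "reasons": reasons, "prev_hash": prev_hash}
    trail.append({**body, "hash": _digest(body)})
    return trail[-1]


def verify_trail(trail):
    """Recompute every hash; an edited or reordered record breaks the chain."""
    prev_hash = "0" * 64
    for record in trail:
        body = {k: v for k, v in record.items() if k != "hash"}
        if record["prev_hash"] != prev_hash or record["hash"] != _digest(body):
            return False
        prev_hash = record["hash"]
    return True


trail = []
append_decision(trail, "flag_transaction", {"amount": 12000, "country": "XX"},
                ["amount above 10000 threshold", "country on watch list"])
append_decision(trail, "approve", {"amount": 40, "country": "DE"}, ["no rule triggered"])
print(verify_trail(trail))            # True
trail[0]["inputs"]["amount"] = 900    # tamper with a past record
print(verify_trail(trail))            # False
```

Each record stores the inputs and the human-readable reasons alongside the outcome, so an auditor can replay why a decision was made and confirm nothing was altered afterward.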

Our approach: We help firms move from "black box" risks to transparent systems. Explore Opinov8’s AI Consulting and Data Services.

Annual sustainability reports are obsolete. Today’s market demands real-time verification.

The challenge isn't the commitment; it's the data. Specifically, Scope 3. Leaders are now using machine learning to synthesize unstructured data across their entire supply chain, turning messy vendor inputs into a defensible "Green Audit Trail." This is the only way to satisfy requirements like the CSRD without drowning in manual paperwork.

Related: See how we engineer Sustainability and ESG Tech to turn data into evidence.

For years, the rule was "know your customer." The problem? Storing that customer’s data made you a target.

Zero-Knowledge Proofs (ZKP) and Self-Sovereign Identity (SSI) have flipped the script. Firms can now verify a user's identity or eligibility without ever holding the sensitive PII (Personally Identifiable Information). It cuts onboarding friction and, crucially, removes the massive data liability from your servers.
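For readers who want to see the principle, here is a toy version of the Schnorr identification protocol, a classic zero-knowledge proof of knowledge. It is a teaching sketch only: the group parameters are deliberately tiny and insecure, the function names are hypothetical, and production identity systems use vetted cryptographic libraries rather than hand-rolled code.

```python
import secrets

# Toy group parameters (assumption, far too small for real use):
# P is prime, Q is a prime dividing P - 1, and G has order Q modulo P.
P, Q, G = 23, 11, 2


def keygen():
    secret = secrets.randbelow(Q - 1) + 1    # private credential, never shared
    public = pow(G, secret, P)               # registered with the verifier
    return secret, public


def commit():
    nonce = secrets.randbelow(Q - 1) + 1     # fresh randomness for every proof
    return nonce, pow(G, nonce, P)


def respond(secret, nonce, challenge):
    return (nonce + challenge * secret) % Q


def verify(public, commitment, challenge, response):
    # An honest prover always passes; without the secret, passing means
    # guessing the challenge in advance.
    return pow(G, response, P) == (commitment * pow(public, challenge, P)) % P


secret, public = keygen()
nonce, commitment = commit()                   # prover -> verifier
challenge = secrets.randbelow(Q)               # verifier -> prover
response = respond(secret, nonce, challenge)   # prover -> verifier
print(verify(public, commitment, challenge, response))  # True
```

The verifier only ever sees the public value, the commitment, and the response; the secret itself never leaves the prover, which is the property that removes the data liability from the verifier's servers.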

Market Insight: Global RegTech spend is projected to hit $33B this year, with identity solutions driving nearly 40% of that growth.

Tokenized assets need tokenized rules. As real-world assets (RWAs) move on-chain, we are seeing the rise of RegChain — protocols that embed compliance directly into smart contracts.

This is "Policy-as-Code." A transaction simply cannot execute unless it satisfies the jurisdictional rules of both parties. The days of expensive post-trade reconciliation are ending because the trade is pre-validated by the code itself.
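A simplified sketch of that pre-validation step is shown below in Python rather than a smart-contract language. The `Party` type, the `RULES` table, and the rules themselves are invented for illustration and do not represent actual law in any jurisdiction.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Party:
    name: str
    jurisdiction: str
    accredited: bool


# Hypothetical rule table: each jurisdiction maps to checks on (party, asset_type).
RULES = {
    "US": [lambda party, asset: asset != "security_token" or party.accredited],
    "EU": [lambda party, asset: asset != "unlicensed_derivative"],
}


def can_execute(buyer, seller, asset_type):
    """Pre-validate: every rule of both parties' jurisdictions must pass."""
    for party in (buyer, seller):
        rules = RULES.get(party.jurisdiction)
        if rules is None:
            return False                      # unknown jurisdiction: fail closed
        if not all(rule(party, asset_type) for rule in rules):
            return False
    return True


alice = Party("Alice", "US", accredited=False)
bob = Party("Bob", "EU", accredited=True)
print(can_execute(alice, bob, "security_token"))   # False: the US rule blocks Alice
print(can_execute(alice, bob, "tokenized_bond"))   # True
```

Failing closed on an unknown jurisdiction mirrors the idea that a trade which cannot be validated should never execute.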

The wall between the CISO and the Compliance Officer has crumbled. With early-stage quantum threats emerging, Post-Quantum Cryptography (PQC) is becoming a standard line item in RegTech budgets.

Regulators now view cyber-resilience as a fiduciary duty. Modern dashboards don’t just show compliance status; they predict resilience against attacks, forcing firms to treat security and compliance as a single, unified discipline.

Deep Dive: Read our guide on preventing cyber attacks in financial services to see how these disciplines intersect.

The "cost of doing business" mentality is gone. In 2026, compliance is the foundation of trust.

The goal is Continuous Compliance — invisible, automated, and flawless. The companies that nail this won't just stay out of trouble; they will move faster than everyone else.

At Opinov8, we build the Fintech Software Solutions that power this transition. Ready to upgrade your architecture? Let's talk.

The reality of engineering in 2026 is that the line between a "security bug" and a "privacy violation" has completely evaporated. We’ve moved past the era where privacy was just a legal document buried in a footer. Today, if your DevSecOps pipeline isn't treating a data residency mismatch with the same urgency as a SQL injection, you’re already behind.

The "shift-left" movement has reached its logical conclusion: Continuous Privacy Engineering. It’s no longer enough to secure the perimeter; we have to secure the data’s right to exist (or be deleted) across increasingly fragmented global networks. Here is how the convergence of privacy and development is actually playing out on the ground this year.

For years, privacy was the "slow-down" department. Developers would build, security would scan, and then, at the very last second, legal would swoop in and flag a data sovereignty issue. In 2026, that friction is a business killer.

High-velocity teams have realized that privacy must be declarative. We are seeing a massive move toward Privacy-as-Code (PaC). By using declarative manifests to define how PII is handled, teams can catch violations during the build phase rather than during an audit. This is why modern software development now requires engineers to have a functional understanding of data ethics, not just syntax.
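To illustrate, here is a minimal sketch of a build-time check against a declarative manifest. The manifest schema, field names, and region identifiers are assumptions made for the example; real Privacy-as-Code tooling defines its own formats.

```python
# Hypothetical manifest: what each PII field is allowed to do.
MANIFEST = {
    "email":       {"allowed_regions": {"eu-west-1"}, "retention_days": 365, "encrypted": True},
    "national_id": {"allowed_regions": {"eu-west-1"}, "retention_days": 30, "encrypted": True},
}

# Hypothetical description of what a service actually does with the data.
SERVICE_CONFIG = [
    {"field": "email", "region": "eu-west-1", "retention_days": 90, "encrypted": True},
    {"field": "national_id", "region": "us-east-1", "retention_days": 30, "encrypted": True},
    {"field": "phone", "region": "eu-west-1", "retention_days": 30, "encrypted": True},
]


def find_violations(manifest, usages):
    """Return readable violations; an empty list means the build may pass."""
    violations = []
    for use in usages:
        policy = manifest.get(use["field"])
        if policy is None:
            violations.append(f"{use['field']}: not declared in manifest")
            continue
        if use["region"] not in policy["allowed_regions"]:
            violations.append(f"{use['field']}: region {use['region']} not allowed")
        if use["retention_days"] > policy["retention_days"]:
            violations.append(f"{use['field']}: retention exceeds {policy['retention_days']} days")
        if policy["encrypted"] and not use["encrypted"]:
            violations.append(f"{use['field']}: must be encrypted")
    return violations


for problem in find_violations(MANIFEST, SERVICE_CONFIG):
    print(problem)
# national_id: region us-east-1 not allowed
# phone: not declared in manifest
```

Wired into CI, a non-empty result would fail the build, which is how a data residency mismatch gets caught long before an audit.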

In the past, we focused on encryption at rest and in transit. Now, the challenge is privacy in use. With the proliferation of scalable cloud solutions that span multiple jurisdictions, managing data lineage has become the top priority for DevSecOps.

Let’s talk about the elephant in the room: Generative AI. In 2026, most enterprises have integrated local LLMs into their workflows. But these models are data-hungry, and "unlearning" a user’s data from a trained model’s weights is a nightmare.

This is where advanced AI and Machine Learning services are pivoting. DevSecOps now includes "Model Sanitization" stages. Before any data hits a training pipeline, it passes through automated filters that strip PII and verify consent tokens. We’re also seeing the rise of Differential Privacy being baked directly into the data science workflow to ensure that even if a model is compromised, individual user identities remain mathematically shielded.
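A bare-bones version of such a sanitization stage might look like the sketch below. It assumes consent is represented as a set of user IDs and uses two deliberately simplistic regular expressions; production pipelines typically rely on dedicated PII-detection tooling, and the `sanitize` function is a hypothetical name.

```python
import re

# Simplistic patterns for illustration only; they will miss many real-world formats.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def sanitize(records, consented_user_ids):
    """Drop records without consent, then mask emails and phone numbers."""
    clean = []
    for record in records:
        if record["user_id"] not in consented_user_ids:
            continue                              # no consent: never reaches training
        text = EMAIL.sub("[EMAIL]", record["text"])
        text = PHONE.sub("[PHONE]", text)
        clean.append({"user_id": record["user_id"], "text": text})
    return clean


records = [
    {"user_id": "u1", "text": "Reach me at jane@example.com or +1 206 555 0100."},
    {"user_id": "u2", "text": "My order never arrived."},
]
print(sanitize(records, consented_user_ids={"u1"}))
# [{'user_id': 'u1', 'text': 'Reach me at [EMAIL] or [PHONE].'}]
```

Running the consent check before any text processing keeps non-consented data out of the pipeline entirely, rather than merely masking it.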

If you’re still relying solely on basic hashing, you’re vulnerable. The industry is moving toward more robust frameworks, often referencing the OWASP Top 10 Privacy Risks to identify where their pipelines are leaking "meta-privacy" (information about the data that is just as sensitive as the data itself).

Technologies like homomorphic encryption, once considered too computationally expensive, are finally becoming viable in specialized DevSecOps workflows, allowing us to process encrypted data without ever "seeing" it.

In 2026, privacy is no longer a hurdle to overcome; it’s a product feature that builds trust. The organizations winning today are the ones that stopped treating privacy as a series of rules and started treating it as a fundamental engineering discipline.

The goal is a "Zero Trust" approach to data, where the system assumes every piece of information is sensitive and requires explicit, code-governed permission to move.

The gap between "functional code" and "compliant code" is widening every day. At Opinov8, we help organizations bridge that gap by baking privacy directly into the architecture, ensuring your speed-to-market isn't compromised by regulatory debt. Let’s talk about your DevSecOps strategy.

The narrative surrounding Artificial Intelligence in travel and hospitality has fundamentally changed. We have moved past the experimental phase of simple chatbots and "digital novelty" into an era of Agentic AI and operational maturity. For industry leaders, the question is no longer if AI should be adopted, but how deep its neural networks should run through the veins of their organizations.

In a sector where experience is the currency, AI has evolved from a backend efficiency tool into the primary architect of the guest journey. This shift is not merely fueling disruption; it is establishing a new baseline for survival and growth in a hyper-competitive global market.

The "chatbots" of the early 2020s, often rigid and frustrating decision trees, have been replaced by Autonomous AI Agents. Unlike their predecessors, which simply retrieved information, these agents possess the agency to act. They negotiate, book, resolve, and modify complex itineraries across fragmented systems without human intervention.

As highlighted in recent industry analysis on remapping travel with agentic AI, the transition to agentic systems allows travel companies to automate complex, multi-step workflows, such as rebooking a cancelled flight while simultaneously updating hotel reservations and ground transport, in seconds rather than hours. This capability is transforming customer service from a cost center into a seamless, value-added experience.

Travelers today generate a massive digital exhaust — from search history and social sentiment to biometrics and spending patterns. The "disruption" here lies in shifting from reactive personalization to predictive personalization — offering a spa deal not because a guest checked in, but because the data indicates flight delays and high stress levels before they even arrive.

However, executing this level of granularity requires a robust backbone. You cannot build modern AI on legacy data silos. Success depends on the kind of resilient data engineering that ensures clean, real-time data flows to your models, turning raw information into actionable guest intelligence.

The margin for error in pricing has vanished. Modern AI algorithms now ingest data points far beyond historical booking curves, incorporating real-time weather events, local competitor pricing changes, and even macroeconomic indicators to adjust room rates and flight prices minute by minute.
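As a rough sketch of how several signals can feed one rate decision, consider the function below. Every weight, threshold, and parameter name is an illustrative assumption; real revenue management systems learn these relationships from data rather than hard-coding them.

```python
def adjust_rate(base_rate, occupancy, competitor_rate, demand_signal,
                floor=0.7, ceiling=1.8):
    """Return a nightly rate; every weight here is an illustrative assumption."""
    multiplier = 1.0
    multiplier += 0.5 * (occupancy - 0.7)      # occupancy above 70% pushes the price up
    multiplier += 0.2 * demand_signal          # e.g. +1 for a local event, -1 for a storm
    candidate = base_rate * multiplier
    candidate = 0.8 * candidate + 0.2 * competitor_rate   # drift toward the local market
    # Guardrails keep the automated price inside a band around the base rate.
    lowest, highest = base_rate * floor, base_rate * ceiling
    return round(min(max(candidate, lowest), highest), 2)


# Busy weekend with a local event and pricier competitors.
print(adjust_rate(200, occupancy=0.9, competitor_rate=260, demand_signal=1))
# Empty hotel during a storm: the floor stops the rate from collapsing.
print(adjust_rate(100, occupancy=0.0, competitor_rate=10, demand_signal=-1))
```

The floor and ceiling matter as much as the signals: minute-by-minute repricing without guardrails is how automated systems end up with rates nobody intended.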

This "total revenue management" approach ensures that inventory is not just sold, but sold to the right customer at the optimal price. Market intelligence leaders note that travel and hospitality trends are increasingly defined by this ability to utilize forward-looking demand data rather than relying on historical benchmarks to navigate market volatility.

Disruption is also visible in what we don't see. The interface of the future is increasingly invisible. Guests now expect "Smart Room Orchestration" where IoT sensors and AI collaborate to adjust lighting, temperature, and entertainment based on personal preferences stored in their loyalty profiles.

To achieve this, organizations must look beyond off-the-shelf tools. Through specialized AI consulting and data services, companies can build the custom architectures required to integrate these intelligent ecosystems. Furthermore, these systems optimize HVAC energy consumption in real-time based on occupancy, significantly reducing the carbon footprint of large hotel properties — a key factor for the eco-conscious traveler.

The travel and hospitality sector is uniquely positioned to leverage these advancements because it deals in high-frequency, high-emotion transactions. However, the gap between "tech-native" travel platforms and traditional hospitality providers is widening. According to the innovator’s guide to travel technology, the next wave involves technologies like Generative Engine Optimization (GEO), shifting focus from traditional SEO to ensuring your brand is recommended by AI assistants.

We are witnessing a wholesale re-architecture of the travel and hospitality landscape. The disruption fueled by AI is not a temporary wave; it is the rising tide. Organizations that successfully integrate agentic AI, predictive analytics, and robust data foundations will not only survive — they will define the standards of modern hospitality.

For deeper dives into the technologies shaping this future, specifically in computer vision and advanced analytics, we invite you to explore our expert insights and tech trends.

At Opinov8, we don't just talk about innovation; we build the engineering DNA that powers it. Whether you need to modernize your data infrastructure or deploy custom AI agents that redefine guest satisfaction, our team is ready to partner with you.

Let’s discuss your AI roadmap today.

The concept of the "smart hospital" has graduated from a futuristic pilot project to an operational necessity. As we move deeper into 2026, IoT technology, specifically the Internet of Medical Things (IoMT), is no longer just about connecting devices; it is about creating an autonomous, responsive nervous system for healthcare.

The days of siloed data and passive monitoring are behind us. Today, the convergence of Edge AI, hyper-connectivity, and advanced interoperability is shifting the focus from simply gathering patient data to acting on it in real-time. This shift is not just improving outcomes — it is rewriting the economic model of care delivery.

Here is how the next generation of IoT is actively improving the future of healthcare.

In the early 2020s, Remote Patient Monitoring (RPM) was a novelty. Now, it is the standard of care for chronic disease management. The distinction between the clinic and the living room has blurred. We are seeing a surge in medical-grade wearables, smart rings, continuous bi-hormonal pumps, and bio-stickers that stream high-fidelity data directly to clinical dashboards.

This capability supports the rapid expansion of "Hospital at Home" models. Patients recovering from surgery or managing complex conditions can now be monitored with ICU-level precision from their bedrooms. This requires robust, scalable infrastructure capable of handling massive data streams without latency — a challenge where cloud-managed services become critical for maintaining uptime and data integrity.

The most significant leap in 2026 is the integration of Generative AI directly into IoT workflows. We aren't just collecting heart rates anymore; we are running local AI models on devices (Edge AI) to detect anomalies instantly before data even leaves the patient's side.

These "AI Agents" can autonomously flag early signs of sepsis or cardiac events, filtering out noise and alerting clinicians only when human intervention is truly needed. This significantly reduces alarm fatigue, a major contributor to clinician burnout. According to recent insights from Wolters Kluwer, the governance of these AI tools has become a top priority for health system C-suites, ensuring that autonomous clinical agents remain accurate and trusted.
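The filtering idea can be sketched with a simple rolling z-score, which stands in here for the on-device model (it is a statistical threshold, not a clinical algorithm or a trained AI model). The class name, window size, and threshold are assumptions chosen for the example.

```python
from collections import deque
from statistics import mean, pstdev


class EdgeHeartRateMonitor:
    """Hypothetical on-device filter: alert only on readings far from the recent baseline."""

    def __init__(self, window=30, z_threshold=3.0, min_samples=10):
        self.readings = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def observe(self, bpm):
        """Return True when the reading should be escalated to a clinician."""
        alert = False
        if len(self.readings) >= self.min_samples:
            baseline = mean(self.readings)
            spread = pstdev(self.readings) or 1.0   # avoid dividing by zero on flat data
            alert = abs(bpm - baseline) / spread > self.z_threshold
        if not alert:
            self.readings.append(bpm)               # keep anomalies out of the baseline
        return alert


monitor = EdgeHeartRateMonitor()
stream = [72, 74, 71, 73, 75, 72, 74, 73, 71, 72, 74, 73, 140, 72]
print([bpm for bpm in stream if monitor.observe(bpm)])   # [140]
```

Only the single outlier is escalated; the thirteen routine readings never generate an alert, which is the mechanism behind reduced alarm fatigue.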

For a deeper dive into how algorithms are reshaping clinical workflows, read our analysis on AI in health care and its impact on investors and patients.

With billions of connected devices, from infusion pumps to smart elevators, the attack surface for hospitals has exploded. In 2026, cybersecurity is no longer an IT ticket; it is a patient safety mandate. A compromised IoT device isn't just a data breach; it can be a life-threatening service disruption.

Healthcare organizations are adopting "Zero Trust" architectures, where every device must continuously authenticate itself. The KLAS Research 2026 report highlights that visibility into device activity is now foundational, with vendors being evaluated on their ability to automate risk remediation.
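Continuous device authentication can be illustrated with a challenge-response exchange over a shared secret, sketched below with Python's standard `hmac` module. The device registry and identifiers are hypothetical, and real deployments would more likely use certificates or hardware-backed keys.

```python
import hashlib
import hmac
import secrets

# Hypothetical registry of per-device secrets provisioned at enrollment.
DEVICE_KEYS = {"infusion-pump-17": secrets.token_bytes(32)}


def issue_challenge():
    return secrets.token_bytes(16)                 # fresh nonce per request limits replay


def device_sign(device_key, challenge):
    return hmac.new(device_key, challenge, hashlib.sha256).digest()


def verify_device(device_id, challenge, signature):
    key = DEVICE_KEYS.get(device_id)
    if key is None:
        return False                               # unknown devices are never trusted
    expected = hmac.new(key, challenge, hashlib.sha256).digest()
    return hmac.compare_digest(expected, signature)


challenge = issue_challenge()
signature = device_sign(DEVICE_KEYS["infusion-pump-17"], challenge)
print(verify_device("infusion-pump-17", challenge, signature))          # True
print(verify_device("infusion-pump-17", issue_challenge(), signature))  # False: stale response
```

Because every request carries a new challenge, a captured response is useless for the next one, which is what "continuously authenticate" means in practice.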

Navigating this regulatory minefield, especially with the EU's strict frameworks, requires a partner who understands compliance and the AI Act inside and out.

Beyond clinical care, IoT is quietly revolutionizing hospital operations. "Digital Twins" — virtual replicas of physical hospital processes — allow administrators to simulate patient flow, optimize staffing, and track assets in real-time.

Smart inventory systems now automatically reorder supplies before they run out, and predictive maintenance sensors on MRI machines alert technicians to potential failures before they cause downtime. This level of operational intelligence is crucial for reducing the staggering waste often found in healthcare logistics.

The technology to save lives and streamline operations exists today. The challenge for 2026 is not hardware; it is integration. Health systems must bridge the gap between legacy on-premise systems and modern, cloud-native IoT ecosystems.

This is where the engineering reality meets the medical vision. Building a platform that is HIPAA-compliant, interoperable (FHIR), and capable of processing real-time data at scale requires more than just code — it requires deep industry context.

Opinov8 specializes in engineering these exact types of mission-critical platforms. From architecting secure data pipelines to building custom interfaces for clinical decision support, we help healthcare innovators turn complex IoT potential into a reliable, life-saving reality.

Ready to modernize your healthcare infrastructure? Let’s talk about your next project.

The financial services landscape has moved far beyond the era of static advertisements and one-way communication. As we navigate 2026, the intersection of finance and social media has matured into a sophisticated ecosystem where "social-first" is no longer a marketing strategy, but a fundamental business model.

Today’s fintech leaders are moving past the novelty of viral posts. They are now focusing on deep technical integration, algorithmic trust, and community-led growth to redefine how users interact with their capital.

In the early 2020s, fintechs used social media primarily for brand awareness. Today, the focus has shifted toward building "walled garden" communities and leveraging hyper-niche social circles. High-growth fintechs are moving away from broad-reach platforms and toward integrated social features within their own apps, or "super-app" environments.

According to recent analysis by McKinsey & Company on the future of retail banking, the democratization of finance is now driven by peer-to-peer validation rather than institutional authority. Modern users don’t just want to see a bank’s post; they want to see how their peers are utilizing specific investment vehicles or savings "buckets."

Fintechs are facilitating this by building social layers — allowing users to share portfolios, split bills via social handles, and participate in DAO-like governance structures for community-funded projects. This shift requires a robust fintech software development framework that can handle the complex data permissions and real-time synchronization required for social-financial interactions.

In 2026, the "influencer" model has evolved. While human creators still hold sway, we are seeing the rise of AI-driven financial avatars and autonomous agents that provide personalized financial education across social channels.

These AI agents analyze social sentiment and individual spending habits to deliver "just-in-time" advice. For instance, if a user expresses a desire to travel on social media, a fintech’s integrated AI can instantly suggest a customized automated savings plan. This level of responsiveness depends on modern product engineering — creating systems that are not just reactive, but predictive.

The friction between "scrolling" and "spending" has completely vanished. Social commerce has transitioned from a trend to a trillion-dollar reality. As noted by Statista’s projections on global social commerce growth, the integration of checkout experiences within social platforms is now the standard.

Fintechs are the invisible engines behind these transactions. By leveraging Embedded Finance (BaaS), they allow social platforms to offer instant credit (BNPL 2.0), insurance at the point of sale, and multi-currency wallets. The technical challenge has shifted from simply processing a transaction to ensuring that the security and compliance layers are invisible to the user but impenetrable to bad actors.

Perhaps the most significant challenge for fintechs in 2026 is maintaining "Signal vs. Noise" on social media. With the proliferation of AI-generated misinformation and sophisticated deepfake scams, fintech brands must act as the ultimate arbiters of truth.

Leading firms are now utilizing blockchain-verified credentials for their social communication. When a fintech brand posts an update or an executive shares an insight, it is accompanied by a cryptographic "seal of authenticity." This helps users distinguish between legitimate financial advice and "finfluencer" scams, which Finextra highlights as a continuing regulatory priority for global financial authorities.

Establishing this trust requires a commitment to digital transformation and transparency, ensuring that every digital touchpoint, from a TikTok clip to a customer support DM, is backed by enterprise-grade security.

In 2026, the DM (Direct Message) is the new front desk. Fintech users expect 24/7, high-fidelity support through the social apps they already inhabit. However, professional fintechs have moved beyond basic chatbots. They now use sophisticated Natural Language Processing (NLP) that can handle complex regulatory disclosures and personalized account inquiries within encrypted social channels.

This shift has turned social media from a marketing expense into a critical operational channel. It allows fintechs to reduce churn by addressing grievances in real-time and turning potential PR crises into demonstrations of superior customer service.

The convergence of finance and social media is no longer about "being online"; it is about being indispensable to the user’s digital lifestyle. As boundaries continue to blur, the fintechs that succeed will be those that view social media not as a megaphone, but as a sophisticated data-rich environment for value exchange.

Scaling these social-financial ecosystems requires more than just a creative marketing team — it requires a partner who understands the underlying architecture of modern finance. At Opinov8, we specialize in building high-performance, secure, and scalable solutions that allow fintechs to dominate the social-first landscape.

Whether you are looking to integrate embedded finance modules, leverage AI for social listening, or re-engineer your platform for the next generation of users, we provide the technical expertise to turn your vision into a market-leading reality. Let’s discuss how we can accelerate your fintech’s evolution.

Choosing the right technology consulting partner is one of the most critical decisions a business can make in its digital transformation journey. The choice often comes down to a fundamental dichotomy: engaging a Big Tech Consultancy, a global powerhouse known for scale and brand, or partnering with a Mid-Tier or Specialist Firm that promises agility and niche expertise.

This decision isn't just about budget; it's about the very nature of the solution you seek. As the digital landscape becomes more complex, especially with the accelerated adoption of Generative AI services and advanced Cloud Migration, understanding the distinct advantages and trade-offs of each model is essential for a successful outcome.

The global, often multi-disciplinary, consulting giants bring a formidable arsenal to the table. They are the established leaders, known for their comprehensive service offerings and unparalleled reach, frequently dominating large-scale IT modernization projects.

Smaller, boutique firms, often defined by having a staff of a few hundred or fewer, focus on depth rather than breadth. They thrive in specialized niches and offer a fundamentally different client experience, often concentrating on cutting-edge fields like Agentic AI or specific Cloud Architecture optimization.

Competitive Landscape: Opinov8 vs. The Industry Giants

To understand where value lies in the current market, it is helpful to compare a specialized mid-size firm like Opinov8 directly against the large-scale incumbents.

While the giants offer mass and coverage, Opinov8 is engineered for speed, engineering depth, and cost-efficiency.

| Aspect | Opinov8 | EPAM | Globant | Endava | Avenga | SoftServe |
| --- | --- | --- | --- | --- | --- | --- |
| Company Size | Mid-size (~500–1,000 people) | Very large (60k+ employees, global) | Large (30k+ employees, global) | Large (11k+ employees, global) | Upper mid-size (6k+ specialists) | Large (12k+ employees, global) |
| Engagement Style | Flexible, fast, highly collaborative; lean governance | Structured, enterprise-grade, process-heavy; suited for very large programs | Design- & innovation-led, studio model; strong for big digital transformation programs | Consulting + agile delivery; strong account-based partnerships | Advisory + delivery, with strong focus on regulated industries (pharma, insurance) | Engineering-led with consulting & managed services; many long-term enterprise accounts |
| Cost | Typically more cost-effective and flexible on commercials (mid-size cost base) | Enterprise-level pricing; rates reflect brand, scale, and global coverage | Enterprise-level pricing; premium for brand, design, and AI capabilities | Enterprise-oriented pricing; optimized for long-term, multi-year engagements | Often competitive vs global giants, but above boutique levels | Enterprise-oriented pricing; strong value for large engineering footprints |
| Speed & Agility | Very fast decision-making, rapid delivery, and easy to escalate directly to leadership | Slower due to large org structure; strong governance and compliance for complex programs | Good speed for large initiatives, but smaller engagements can feel process-heavy | Balanced: agile teams but with enterprise governance in place | Reasonably agile; can be more process-driven where compliance/regulation is strict | Scaled agile; fast once set up, but POCs can be slower than with smaller firms |
| Customization | High: tailored solutions, co-creation with client teams, minimal one-size-fits-all | High but usually within corporate frameworks, reference architectures, and tooling | High, especially in digital products & customer experience; strong UX/studio playbooks | High in core verticals (payments, FS, TMT) where they have deep patterns and templates | High in pharma, life sciences, insurance, and Salesforce ecosystems | High technical customization with strong platform know-how (cloud, data, AI, DXPs) |
| Telco/BSS/OSS Depth & Industry Focus | Strong engineering + cloud + data capabilities; telco experience but not a pure telco/BSS integrator | Deep telco/BSS/OSS domain expertise; long history with Tier-1 operators | Strong in media, entertainment, sports, and customer experience; telco more via broader TMT, not pure BSS/OSS focus | Very fast decision-making, rapid delivery, and easy escalation directly to leadership | Very strong in pharma, life sciences, insurance, and automotive; limited public emphasis on telco/BSS/OSS | Broad cross-industry depth (finance, healthcare, retail, media) with advanced cloud/data/AI practices; not primarily branded as a telco/BSS specialist |
| Innovation | High agility; fast prototyping & POCs, especially around cloud, data, and AI | Very strong R&D resources, labs, and platforms, but execution can be slower due to scale | Positions itself as an AI & digital reinvention leader; heavy investment in AI Studios and AI Reinvention Network | Innovation focused on regulated sectors (pharma, insurance) and Salesforce/enterprise platforms; more practical engineering than pure lab R&D | Innovation focused on regulated sectors (pharma, insurance) and Salesforce/enterprise platforms; more practical engineering than pure lab R&D | Significant investments in cloud, big data, AI/ML, and Gen AI; large engineering labs and strong hyperscaler partnerships |
| Best Fit For | Companies needing flexibility, cost efficiency, and quick engineering ramp-up; mid-size enterprises or business units inside large groups | Very large enterprises needing huge teams, complex multi-year programs, and heavy governance | Global brands looking for large-scale digital product/experience reinvention, especially in media, sports, and consumer sectors | Banks, payments firms, and TMT players needing a domain-savvy partner with sizeable but agile teams | Pharma, life sciences, insurance, and automotive organizations that need regulated-industry expertise with nearshore/offshore delivery | Enterprises seeking a large Eastern-European-rooted engineering partner for cloud, data, and AI across many industries |

Beyond size, the distinct difference lies in innovation velocity and commercial flexibility:

The optimal choice depends entirely on the nature and context of your project.

In the current landscape, the most successful enterprises often use a blended approach:

At Opinov8, we are engineered for the modern challenge: bridging the gap between high-level Digital Transformation Consulting and expert, hands-on engineering execution.

We deliver the deep, specialized technical expertise of a boutique firm at the speed and scale required for ambitious projects. Unlike the giants, we don't just provide resources; we provide outcomes.

If you are looking to integrate advanced Generative AI Services, accelerate a secure Cloud Migration, or need a specialized team for Product Engineering, partner with us. We ensure your technology strategy is directly tied to measurable business value, free from the bureaucracy of the "Big Tech" machinery.

Ready to accelerate your technical roadmap? Contact Opinov8 to explore how our specialized expertise can deliver enterprise-grade results for your most complex challenges.

Computer Vision (CV) has moved far beyond academic research. It is now the backbone of automation in industries where visual data drives critical decisions — manufacturing, logistics, retail, healthcare, sports, fintech, and more. As companies accelerate their AI transformation, the ability to turn images and video into actionable insights is becoming a strategic advantage.

Recent breakthroughs have dramatically expanded what's possible:

Equally important, advances in edge computing have made it possible to deploy sophisticated CV models directly on cameras, IoT devices, and embedded systems — reducing latency, cutting cloud costs, and addressing data privacy concerns. Modern enterprises now choose between cloud-based processing for heavy workloads and edge deployment for real-time, on-device inference — or combine both in hybrid architectures.

These advances, combined with scalable data pipelines and MLOps practices, have pushed CV into mainstream enterprise adoption. From detecting anomalies on production lines to understanding consumer behavior and powering real-time analytics, CV is redefining operational efficiency.

At its core, Computer Vision enables machines to extract structured, actionable information from images and video — processing visual data faster, more consistently, and at far greater scale than manual review ever could.

Modern CV systems perform a range of specialized tasks, each solving distinct business problems:

When integrated with robust data engineering, cloud-native or edge-native infrastructure, and continuous model improvement pipelines, CV becomes a catalyst for automation and intelligent decision-making across the enterprise.

Computer Vision solutions enable factories to shift from manual inspection to predictive quality control, catching defects early and reducing downtime. Automated anomaly detection and real-time monitoring help organizations improve throughput and minimize waste. Edge-deployed models allow inspection at the production line with sub-second latency, while cloud-based systems handle batch analysis and model retraining.

Retailers use CV to optimize layouts, analyze customer traffic, automate checkout, and enhance loss prevention. Eye-tracking analytics, in-store heatmaps, and automated stock monitoring help teams run smarter operations. Foundation models like CLIP now enable flexible product recognition without extensive retraining for each new SKU.

CV supports barcode recognition, pallet identification, inventory tracking, and container monitoring. With edge-based models deployed on warehouse cameras and handheld devices, logistics companies gain real-time visibility without relying on constant cloud connectivity — improving resilience and reducing operational costs.

From early disease detection to surgical assistance, Computer Vision supports clinical decision-making and reduces diagnostic gaps. Segmentation models help radiologists identify tumors, while pose estimation aids in physical therapy assessments.

CV powers player tracking, automated highlight generation, tactical analytics, and personalized fan experiences — a rapidly growing market across both the US and Europe. Pose estimation and object tracking enable detailed performance analysis previously impossible without expensive manual annotation.

Companies achieving real results with CV invest in strong engineering foundations. Computer Vision isn't a standalone tool; it is part of a larger AI engineering ecosystem that requires:

One of the most important architectural decisions in CV projects is where inference happens. Many enterprise CV systems now use hybrid architectures: edge devices handle real-time inference and filtering, while cloud infrastructure manages model training, analytics aggregation, and heavy batch processing.

| Feature | Cloud Deployment | Edge Deployment |
| --- | --- | --- |
| Latency | Higher (Network round-trip) | Very low (On-device) |
| Cost Model | Pay per inference | Fixed hardware cost |
| Data Privacy | Data leaves the premises | Data stays local (Better privacy) |
| Compute Power | Unlimited (Good for complex models) | Constrained by device capabilities |
| Connectivity | Requires a stable connection | Works offline |
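The hybrid pattern described above can be sketched as a small routing rule: the edge device settles confident results locally and queues only ambiguous frames for heavier cloud analysis, sending them when a connection is available. The class name, thresholds, and return labels here are illustrative assumptions, not values from any particular deployment.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Detection:
    frame_id: int
    label: str
    confidence: float  # edge model score in [0, 1]


@dataclass
class HybridRouter:
    """Keeps clear-cut decisions on the edge and escalates ambiguous ones to the cloud."""
    accept_threshold: float = 0.90   # hypothetical: at or above this, trust the edge result
    discard_threshold: float = 0.20  # hypothetical: below this, treat the detection as noise
    pending: List[Detection] = field(default_factory=list)

    def route(self, det: Detection) -> str:
        if det.confidence >= self.accept_threshold:
            return "accept"
        if det.confidence < self.discard_threshold:
            return "discard"
        # Ambiguous: hold the frame locally until it can be sent to the cloud.
        self.pending.append(det)
        return "escalate"

    def flush(self, online: bool) -> List[Detection]:
        """Return the queued detections for upload, or nothing while offline."""
        if not online:
            return []
        batch, self.pending = self.pending, []
        return batch


router = HybridRouter()
for det in (Detection(1, "scratch", 0.95), Detection(2, "scratch", 0.05), Detection(3, "scratch", 0.50)):
    print(det.frame_id, router.route(det))
```

Because `flush` returns nothing while offline, the edge device keeps working through connectivity gaps and the cloud only ever sees the small fraction of frames that need a second opinion.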

Even with rapid progress, enterprises must navigate specific hurdles. This is where a strong engineering partner becomes essential to balance:

At Opinov8, we support organizations across the US, UK, EU, and global markets in building enterprise-grade AI and Computer Vision systems that drive measurable business outcomes.

Our expertise includes:

At every step, Opinov8 helps companies transform visual data into operational intelligence through reliable, scalable, and secure Computer Vision solutions.

As AI becomes the operating system of modern business, Computer Vision stands out as one of the most practical, ROI-positive applications. Companies that adopt CV today build stronger, faster, smarter organizations — while those that hesitate risk falling behind competitors already using visual intelligence to drive decisions.

If you're exploring computer vision development, AI engineering, or digital transformation, Opinov8 is ready to help.

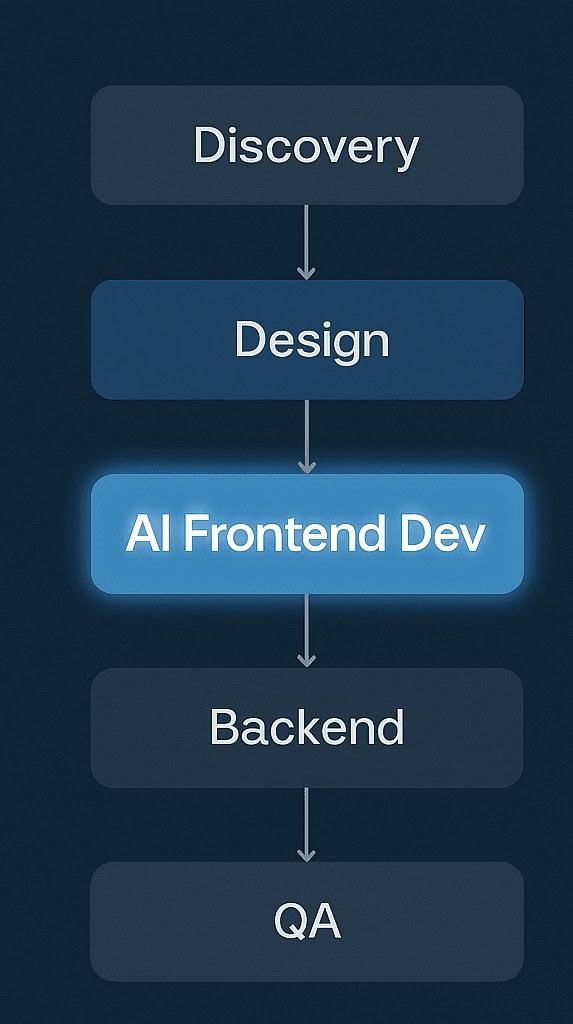

Deploying market-ready software today requires a Strategic Quality Engine that does far more than simply "check if it works." In an era defined by hyper-automation and AI-native applications, the definition of quality has expanded. It is no longer a final hurdle before release; it is the very backbone of digital trust.

Industry reports suggest that despite the ubiquity of AI coding assistants, the cost of quality — or rather, the cost of poor quality — remains a massive line item. IT departments continue to allocate significant budgets to quality assurance (QA), not just to find bugs, but to safeguard brand reputation and revenue. In a fast-paced DevOps environment, the ability to predict defects before they happen is the new competitive advantage.

When organizations view testing as a mere checkbox, they risk more than just technical debt. They risk delivering a fragmented user experience (UX) that drives customers away in seconds. Furthermore, cutting corners on validation leaves critical security gaps, a vulnerability no enterprise can afford in the current threat landscape.

This is where Testing as a Service (TaaS) has evolved from a simple outsourcing model into a strategic capability. TaaS is no longer just about "extra hands"; it is about accessing a scalable, AI-empowered quality infrastructure that accelerates delivery without compromising resilience.

The traditional approach to software testing — linear, manual, and reactive — is obsolete. Modern software development demands a "Shift-Left" and "Shift-Right" hybrid model. We are testing earlier in the design phase to prevent architectural defects, and testing continuously in production to monitor real-world resilience.

This complexity is why leading enterprises are turning to specialized partners. By leveraging TaaS, organizations can bypass the steep learning curve of new tools and immediately tap into advanced methodologies.

The most significant shift in 2026 is the integration of Artificial Intelligence (AI) and Machine Learning (ML) into the QA lifecycle. We aren't just running scripts anymore; we are deploying autonomous AI agents that can generate test data, heal broken test scripts automatically, and predict high-risk areas based on code changes. According to recent industry insights on autonomous testing trends, these AI "colleagues" allow human testers to focus on complex, creative problem-solving rather than rote execution.
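As a rough illustration of what "predicting high-risk areas based on code changes" can mean, the sketch below ranks changed files by a simple hand-written heuristic that blends code churn with past failure counts. This is a stand-in for a learned model, not an implementation of any specific tool; the weights, caps, and names are all assumptions chosen for readability.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class FileChange:
    path: str
    lines_changed: int


def risk_score(change: FileChange, past_failures: Dict[str, int]) -> float:
    """Blend churn and failure history into a score in [0, 1] (hypothetical weights)."""
    churn = min(change.lines_changed / 100.0, 1.0)                  # cap at 100 changed lines
    history = min(past_failures.get(change.path, 0) / 5.0, 1.0)     # cap at 5 past failures
    return round(0.5 * churn + 0.5 * history, 3)


def rank_changes(changes: List[FileChange], past_failures: Dict[str, int]) -> List[FileChange]:
    """Order changes so the riskiest files get tested first."""
    return sorted(changes, key=lambda c: risk_score(c, past_failures), reverse=True)


changes = [
    FileChange("checkout/payment.py", 10),
    FileChange("search/index.py", 200),
    FileChange("ui/banner.py", 50),
]
failures = {"checkout/payment.py": 5, "ui/banner.py": 1}

for change in rank_changes(changes, failures):
    print(change.path, risk_score(change, failures))
```

A small change to a file with a long failure history can outrank a large change to a stable file, which is the kind of prioritization a test suite would use to decide what to run first.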

In the age of DevSecOps, security cannot be an afterthought. It must be woven into the fabric of the delivery pipeline. TaaS providers bring specialized expertise in continuous security validation, ensuring that compliance and data protection are checked with every commit. Understanding the depth of web application security assessments is crucial for protecting customer data against increasingly sophisticated cyber threats.

One of the primary drivers for TaaS adoption is elasticity. Building an internal infrastructure capable of simulating millions of users or diverse device environments is cost-prohibitive. TaaS offers a cloud-native environment where resources can be spun up or down instantly. This aligns perfectly with the need to automate your DevOps process, ensuring that your testing capacity grows in lockstep with your development velocity.

Partnering with a technology service provider like Opinov8 for TaaS allows your internal teams to return to their core competency: innovation. While your developers focus on building the next generation of features, the TaaS partner ensures that the foundation is solid.

As noted in the 2025 State of Testing Report, the industry is facing a pivotal moment where adopting these advanced tools is separating market leaders from laggards. The focus has shifted from finding bugs to ensuring value.

At Opinov8, we treat Quality Assurance not as a phase, but as a culture. We follow a rigorous Quality Assurance framework that blends the precision of automation with the intuition of expert manual testing.

Our approach integrates seamlessly into your existing DevOps structure. We provide a full view of the testing process — transparency is key. You aren't just getting a "pass/fail" report; you are getting insights into why issues occurred and how to prevent them. By utilizing best-in-class automated tools and AI-enhanced workflows, we speed up the entire cycle, ensuring your product is robust, secure, and ready for the demands of the modern user.

In a market where trust is the ultimate currency — a sentiment echoed by Forrester’s predictions for the coming year — delivering flawless digital experiences is the only option.

Your software is the face of your business. Don't leave its quality to chance or outdated processes.

If you are ready to modernize your QA strategy and ensure your product delivers on its promise, let’s talk. Opinov8 can help you build a testing ecosystem that scales with your ambition.

Recent advancements in Generative AI and Large Language Models (LLMs) have fundamentally reshaped how brands interact with consumers. We have moved far beyond the rigid, scripted chatbots of the past decade. Today, customers interact with intelligent, emotionally aware AI agents that understand context, nuance, and intent. As these technologies mature, they are no longer just a novelty — they are a critical driver of competitive advantage and brand equity.

This evolution is what we recognize today as the mature state of conversational commerce: a seamless, omnichannel ecosystem where sales, support, and personalized advisory services converge within natural dialogue.

When the concept was first defined by Chris Messina, conversational commerce was largely about convenience — meeting customers where they were, primarily in messaging apps. In the current market, the scope has expanded dramatically. It is no longer just about text; it involves voice assistants, multimodal interactions (combining text, voice, and visual inputs), and "agentic" capabilities where AI actively performs tasks on behalf of the user.

For brands, this means the entire sales funnel, from initial discovery and inspiration to the final transaction, can occur within a single, fluid interaction. By leveraging AI-driven e-commerce strategies, companies can provide a level of service previously possible only with human concierges, but at a scale no human team could match.

The core of modern brand value lies in relevance. A generic response today is often perceived as a failure of customer service. Advanced conversational systems utilize real-time data to tailor every interaction. Rather than asking a customer for their preferences repeatedly, the system instantly recalls purchase history, browsing behavior, and even sentiment from previous interactions.

This capability relies heavily on intelligent data analysis. When an AI agent processes user data in milliseconds, it doesn't just retrieve a file; it synthesizes a solution. For instance, instead of simply listing products, it might say, "Based on the hiking gear you bought last season, this new lightweight jacket would be a perfect addition for your upcoming trip." This predictive approach transforms the dynamic from transactional to relational.

Industry leaders are seeing this shift across the board. According to recent insights on transformative e-commerce trends, the ability of AI to act as a personal shopper is one of the most significant factors driving customer loyalty in the digital age.

True conversational commerce is platform-agnostic. A conversation might start on a smart speaker in the kitchen, continue via a messaging app during a commute, and conclude with a visual confirmation on a desktop. The transition must be invisible to the user.
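One way to picture an "invisible" channel transition is to key conversation state by customer rather than by channel, so every channel reads and writes the same record. The sketch below uses an in-memory dictionary and hypothetical class and channel names purely for illustration; a real system would need persistent storage and identity resolution, which are out of scope here.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Conversation:
    customer_id: str
    turns: List[Tuple[str, str]] = field(default_factory=list)  # (channel, message)
    cart: List[str] = field(default_factory=list)


class ConversationStore:
    """Holds one conversation per customer, regardless of which channel is in use."""

    def __init__(self) -> None:
        self._by_customer: Dict[str, Conversation] = {}

    def get(self, customer_id: str) -> Conversation:
        if customer_id not in self._by_customer:
            self._by_customer[customer_id] = Conversation(customer_id)
        return self._by_customer[customer_id]

    def record(self, customer_id: str, channel: str, message: str) -> Conversation:
        convo = self.get(customer_id)
        convo.turns.append((channel, message))
        return convo


store = ConversationStore()

# The conversation starts on a smart speaker...
kitchen = store.record("customer-42", "smart_speaker", "Add coffee filters to my cart")
kitchen.cart.append("coffee filters")

# ...and continues in a messaging app with the cart and history intact.
commute = store.record("customer-42", "messaging_app", "What is in my cart?")
print(commute.cart, [channel for channel, _ in commute.turns])
```

Since the channel is just a field on each turn, adding a new touchpoint does not require a new store or a handoff step.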

This "zero-friction" environment is vital for both B2C and B2B sectors. In the B2B space specifically, the evolution of B2B buying experiences shows a growing preference for rep-free, self-serve interactions that still retain a consultative feel. AI agents bridge this gap, offering instant technical specs, quote adjustments, and inventory checks without the wait times associated with human sales teams.

Implementing these advanced systems is not without its complexities. It requires a robust infrastructure capable of handling unstructured data and ensuring privacy compliance while delivering instant responses. Many organizations face difficulties in unifying their legacy systems with modern AI architectures.

Successfully overcoming AI integration challenges is often the differentiator between a brand that merely uses a chatbot and one that truly embodies conversational commerce. It involves rigorous testing, continuous learning loops (for example, reinforcement learning from human feedback, or RLHF), and a strategic approach to data governance. The payoff, however, is substantial. Diverse generative AI use cases, from automated returns handling to dynamic upselling, prove that when done correctly, the ROI extends beyond sales to include customer retention and brand advocacy.

Conversational commerce has graduated from an experimental channel to a primary pillar of digital strategy. It offers a unique opportunity to demonstrate respect for the consumer's time and intelligence. By deploying AI that listens, understands, and anticipates needs, brands can build a reputation for innovation and reliability.

In an era where technology moves faster than ever, having a partner who understands the intricacies of digital evolution is essential. At Opinov8, we specialize in engineering the digital future, helping enterprises navigate the complexities of AI adoption and platform modernization. Whether you are looking to refine your data strategy or build a bespoke conversational ecosystem, our team is ready to help you unlock new value.

Get in touch with Opinov8 to discuss your digital future

Cloud migration is often viewed through a lens of technical architecture — latency reduction, scalability, and data sovereignty. However, the most sophisticated cloud infrastructure will fail to deliver return on investment if the people managing it are left behind. As organizations transition from legacy on-premises systems to dynamic, multi-cloud environments, the human element becomes the single greatest determinant of success.

A purely technological approach often leads to "cloud chaos" — uncontrolled costs, security vulnerabilities, and operational silos. To navigate this complexity, leaders must prioritize a comprehensive workforce transformation strategy that parallels their technical roadmap.

The shift to the cloud is not merely a change in hosting; it is a fundamental shift in mindset. It requires moving away from rigid, ticket-based operations toward agile, collaborative workflows. Clear communication is the bedrock of this cultural pivot. Leaders must articulate not just what the cloud is, but why it matters — explaining how it accelerates innovation, enhances data resilience, and secures the organization's future.

This involves dismantling the barriers between development and operations. By shifting from traditional IT to DevOps paradigms, teams gain a shared understanding of responsibility. Engineers must understand that in the cloud, infrastructure is code, and operational efficiency is everyone’s job.

If your current workforce lacks proficiency in microservices, containerization, or serverless architectures, the instinctive reaction might be to replace them. However, wholesale replacement is often a strategic misstep. Institutional knowledge — the deep understanding of your business logic and customer needs — is irreplaceable.

Instead, invest in upskilling your loyal employees who demonstrate high adaptability. Establishing a Cloud Center of Excellence (CCoE) is a proven best practice. A CCoE creates a centralized governance function that not only sets standards but also acts as a knowledge hub, curating training programs and mentoring staff. This approach turns your migration into a learning opportunity, boosting retention and morale.

Even with aggressive upskilling, specific gaps will remain, particularly in niche areas like AI integration, FinOps, or advanced cryptography. The evolving cloud computing landscape demands specialized roles that are increasingly difficult to source.